The Catalog Tool

The Catalog Tool allows you to browse, search, and discover data in, and connected to, the Oracle Autonomous AI Database.

The Autonomous AI Database Catalog is a multi-catalog tool that provides a way to search for data and other objects in your currently connected Autonomous AI Database, and also in a wide range of other connected systems.

The Catalog tool enables you to search, find, load, or link connected data assets from anywhere in the cloud and beyond. It also enables you to mount new catalogs over other systems, so that you can search for data and other items both in the connected Autonomous AI Database and in systems such as:

- Other Autonomous AI Databases in your tenancy.

- Any other database that can be connected using a DB Link, for example an on-premises database that your Autonomous AI Database can connect to.

- Shared data, for example data shared from Databricks using Delta Sharing.

- Existing external data catalogs such as AWS Glue, or the OCI Data Catalog.
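To see which databases your user can already reach over DB Links, you can query the data dictionary view ALL_DB_LINKS, which lists the links visible to the current user. This is a minimal sketch: ONPREM_LINK and SALES are hypothetical names for a link and a remote table, so substitute your own.

```sql
-- List the database links visible to the current user.
-- Each link points to a remote database that is a candidate source of connected data.
SELECT owner, db_link, host
FROM   all_db_links
ORDER  BY owner, db_link;

-- Query a remote table through a link.
-- ONPREM_LINK and SALES are hypothetical names; replace them with your own.
SELECT COUNT(*)
FROM   sales@onprem_link;
```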

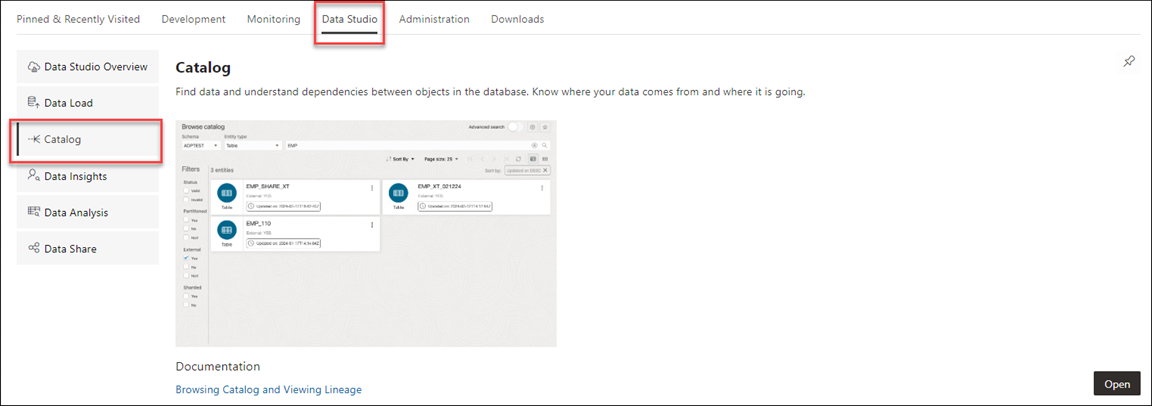

To reach the Catalog tool, select the Catalog menu in the Data Studio tab of the Launchpad.


Alternatively, click the Selector icon and select Catalog from the Data Studio menu in the navigation pane.

If you do not see the Catalog card, your database user is missing the required DWROLE role.
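To confirm whether DWROLE is active for a user, that user can query the SESSION_ROLES view. This is a minimal sketch that assumes you run it while connected as the user who cannot see the Catalog card.

```sql
-- Run as the user who cannot see the Catalog card.
-- SESSION_ROLES lists the roles enabled in the current session,
-- including roles granted indirectly through other roles.
-- No rows returned means DWROLE is not active for this session.
SELECT role
FROM   session_roles
WHERE  role = 'DWROLE';
```

A role granted while the user is connected typically shows up in SESSION_ROLES only after the user reconnects.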

To grant DWROLE to the user, run the following command as an admin (ANALYST is the example user name):

`GRANT DWROLE TO ANALYST;`

This chapter takes you through the features, capabilities, and technical architecture of the Catalog tool.

Enable the multi-catalog feature in the tool

To enable the multi-catalog feature in this tool, you need the ORACLE_CATALOGS role.

Until the user is granted the ORACLE_CATALOGS role, they can mount only the catalog of the local, connected Autonomous AI Database.
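An admin can check whether the ORACLE_CATALOGS role already exists, and which users have been granted it, by querying the data dictionary. This is a minimal sketch; it assumes you are connected as an admin user with access to the DBA_ROLES and DBA_ROLE_PRIVS views.

```sql
-- Run as an admin. Returns a row only if the ORACLE_CATALOGS role exists.
SELECT role
FROM   dba_roles
WHERE  role = 'ORACLE_CATALOGS';

-- Run as an admin. Lists the users and roles that hold ORACLE_CATALOGS.
SELECT grantee
FROM   dba_role_privs
WHERE  granted_role = 'ORACLE_CATALOGS'
ORDER  BY grantee;
```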

If the ORACLE_CATALOGS role is missing, run the following commands as an admin to create the role and grant it to the user, replacing USER with the name of the database user:

`CREATE ROLE ORACLE_CATALOGS;`

`GRANT ORACLE_CATALOGS TO USER;`

The following topics describe the Catalog tool and how to use it.

- About the Catalog Page

  Use the Catalog Tool to browse, search, and discover data in your Oracle Autonomous AI Database or connected to it, and to get information about the entities in, and available to, your Oracle Autonomous AI Database. You can see the data in an entity, the sources of that data, the objects that are derived from the entity, and the impact that changes in the sources have on derived objects.

- Browse and Search Catalogs

  The Catalog Tool is designed to help you find data quickly and easily, whether or not the data currently resides in the connected Autonomous AI Database.

- Sorting and Filtering Entities

  On the Catalog page, you can sort and filter the displayed search results.

- Filter Entities

  The faceted results for entity types vary depending on which quick filters you select.

- Display Entity Search

  If you do not select a quick filter, the Tables and Views filter is selected by default.

- Catalog Settings

  Use Catalog Settings to manage general settings, add and view query scopes, create and view saved searches, and change the look and feel of the Catalog page.

- Manage Catalogs

  You can enable multiple catalogs using the Catalog Tool.

- Viewing Entity Details

  When you find an entity you are interested in, click the entity to view its details, or choose one of the other actions from the menu that appears on the right-hand side when you hover over that row.

- Registering Cloud Links to Access Data

  Cloud Links enable you to remotely access read-only data on an Autonomous AI Database instance.

- Export Data to Cloud

  Use the Export Data to Cloud menu in the table actions of the Browse Catalog page to export data from an Autonomous AI Database to a cloud Object Store. The supported export formats are CSV, JSON, Parquet, and XML.

- Gathering Statistics for Tables

  You can generate statistics that measure the data distribution and storage characteristics of tables.

- Editing Tables

  You can create and edit objects using the Edit Table wizard, available from the Edit menu under Actions (three vertical dots) beside the table entity.
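Several of the actions summarized above, such as gathering statistics, inspecting derived objects, and exporting data, also have SQL counterparts that you can run from a SQL worksheet. The script below is a minimal sketch under stated assumptions, not a description of how the Catalog tool implements these actions: SALES is a hypothetical table in your own schema, OBJ_STORE_CRED is a hypothetical credential assumed to have been created earlier with DBMS_CLOUD.CREATE_CREDENTIAL, and the Object Store URI contains placeholders that you must replace. ALL_DEPENDENCIES records only dictionary-level dependencies (for example views and PL/SQL units), so its output may not match the lineage and impact information shown in the Catalog tool.

```sql
-- 1. Gather optimizer statistics for a table.
--    SALES is a hypothetical table in the current schema.
BEGIN
  DBMS_STATS.GATHER_TABLE_STATS(
    ownname => USER,
    tabname => 'SALES');
END;
/

-- Inspect some of the statistics that were collected.
SELECT table_name, num_rows, blocks, last_analyzed
FROM   user_tables
WHERE  table_name = 'SALES';

-- 2. List objects that depend on SALES, that is, objects
--    that a change to SALES could affect.
SELECT owner, name, type
FROM   all_dependencies
WHERE  referenced_owner = USER
AND    referenced_name  = 'SALES';

-- 3. Export the table to Object Store as CSV.
--    OBJ_STORE_CRED is a hypothetical credential, and the
--    <region>, <namespace>, and <bucket> parts of the URI are placeholders.
BEGIN
  DBMS_CLOUD.EXPORT_DATA(
    credential_name => 'OBJ_STORE_CRED',
    file_uri_list   => 'https://objectstorage.<region>.oraclecloud.com/n/<namespace>/b/<bucket>/o/sales_export',
    query           => 'SELECT * FROM sales',
    format          => '{"type":"csv"}');
END;
/
```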
