Parse Log Records with Multiple Timestamps

Some log records can have multiple timestamps like the log entry

time, the start time, and end time of a process or transaction that you may want to

capture into their own fields. After capturing the start and end time into their own

fields, you can use the eval command to perform date manipulation

on such fields, for example, to get the duration between the two times. See eval.

Oracle Log Analytics has several Oracle-defined fields that let you store time and date information that are not the actual log entry time:

-

Event End Time

-

Event Start Time

-

Event Generation Time

-

First Event Time

-

Contact End Time

-

Contract Start Time

-

Alert Raised Time

-

Collection Time

-

Detected Time

The data that is stored into these fields must be in the ISO-8601 format:

2018-07-04T23:43:34.000ZWhile creating

the parser, you can use the {TIMEDATE} macro only once to express

the log entry time. For the additional time fields, you must extract the data using

one of the methods below depending on your use case.

Case 1: Your log already has the time and date information in ISO-8601 format

If your log already has additional time and date information in ISO-8601 format, then you can extract them as strings in the base parser of Extended Field Definition.

Consider the following example log:

July 4, 2018 23:43:12 Server1 ModuleA Transaction completed. Start=2018-07-04T23:45:34.000Z, End=2018-07-04T23:46:39.000ZThe log contains time and date information for log entry, start time, and end time.

-

To obtain the log entry time, create the base parser. See Create a Parser.

{TIMEDATE}\s(\S+)\s(\S+)\s(.*) -

Open the Create Source dialog box. See Create a Source.

-

Select the base parser that you created in step 1.

-

Provide the file path for the example log.

-

In the Extended Fields tab, add the extended field definitions to the log source to extract the time and date fields:

-

From Message field:

Start={Event Start Time:\S+} -

From Message field:

End={Event End Time:\S+}

-

-

Save the new source that you created.

You’ll now notice that the two fields Event Start Time and Event End Time are populated with the values from the log. In the Log Explorer, you can see the times as milliseconds since epoch.

Case 2: Your log does not have the time and date information in ISO-8601 format

If the additional time fields that you want to extract are not in the ISO-8601 format, then you must follow these steps for parsing:

Consider the example log file where the entire file is a single log entry:

+---------------------------------------------------------------------------+

Application Object Library: Version : 12.2

Copyright (c) 1998, 2013, Oracle and/or its affiliates. All rights reserved.

FNDWFBG: Workflow Background Process

+---------------------------------------------------------------------------+

Current system time is 04-JUL-2018 17:25:23

+---------------------------------------------------------------------------+

**Starts**04-JUL-2018 17:25:23

**Ends**04-JUL-2018 18:25:23

+---------------------------------------------------------------------------+

Start of log messages from FND_FILE

+---------------------------------------------------------------------------+

+---------------------------------------------------------------------------+

End of log messages from FND_FILE

+---------------------------------------------------------------------------+

Successfully resubmitted concurrent program FNDWFBG with request ID 239834523 to start at 04-JUL-2018 18:30:23 (ROUTINE=IERKWEP)

+---------------------------------------------------------------------------+

No completion options were requested.

Output file size:

0

Output is not being printed because:

The print option has been disabled for this report.

+---------------------------------------------------------------------------+

Concurrent request completed successfully

Current system time is 04-JUL-2018 18:30:23

+---------------------------------------------------------------------------+-

Create the base parser. See Create a Parser.

.*?Current system time is {TIMEDATE}.*\*\*Starts\*\*([\S\ ]+).*?\*\*Ends\*\*([\S\ ]+).*The fields that you must select for parsing are:

-

Version

-

Event Start Time

-

Event End Time

For the above example log, select the Handle entire file as a single log entry check box. No header regex is required.

-

-

Open the Create Log Source dialog box. See Create a Source.

-

Select the base parser that you created in step 1.

-

Provide the file path for the example log.

-

Navigate to Data Filters tab.

-

Convert Month Short Name to Number:

If your log already has a numeric month number instead of a month name, then you can skip this step and go to step 7.

If your log has the short name for the month instead of the month number, then, to convert the month short name to month number, add twelve data filters of the type MASK.

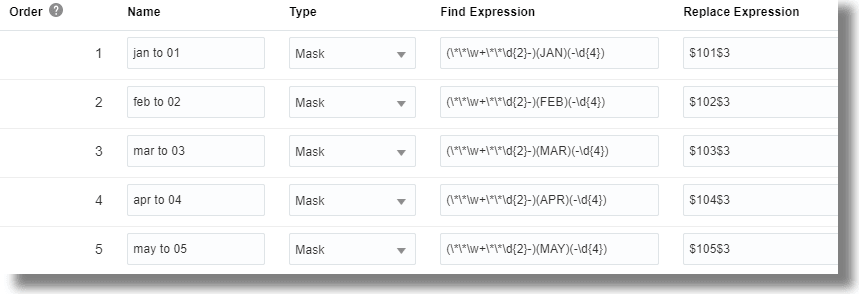

For each calendar month, the data filter will have similar details as for January month below:

-

Name:

Jan to 01 -

Type:

Mask -

Find Expression:

(\*\*\w+\*\*\d{2}-)(JAN)(-\d{4}) -

Replace Expression:

$101$3

The data mask finds occurrences of the time pattern in the log:

**Starts**04-JAN-2018 17:25:23It captures the data before

JAN, the valueJAN, and the data afterJANinto three capture groups. The capture groups are indicated with the three pairs of parentheses( ).Then in the replace expression, the value from the first capture group is replaced using

$1, the valueJANis replaced with01, and the third capture group is replaced using$3.After the data filter is implemented, the time and data information appears as follows:

**Starts**04-01-2018 17:25:23 -

-

Rewrite the time and date information in ISO-8601 format:

Now that the time and date information is available in the right data type, rewrite the time and date data to be in the ISO-8601 format using two data filters for the example log:

These two data filters must be positioned after the twelve data filters that you created to convert month short name to month number. This’ll ensure that the time and date data format is evaluated after the month short name is converted to month number. Use the up and down arrows to change the order of the data filters.

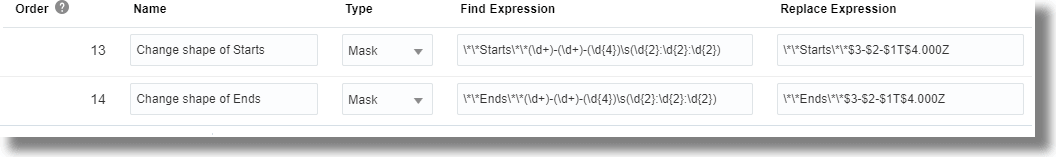

Create the following two data filters to convert the start time and end time data to ISO-8601 format:

-

-

Name:

Change shape of Starts -

Type:

Mask -

Find Expression:

\*\*Starts\*\*(\d+)-(\d+)-(\d{4})\s(\d{2}:\d{2}:\d{2}) -

Replace Expression:

\*\*Starts\*\*$3-$2-$1T$4.000Z

-

-

-

Name:

Change shape of Ends -

Type:

Mask -

Find Expression:

\*\*Ends\*\*(\d+)-(\d+)-(\d{4})\s(\d{2}:\d{2}:\d{2}) -

Replace Expression:

\*\*Ends\*\*$3-$2-$1T$4.000Z

-

In the find expression, each element of time and date is captured. In the replace expression, the order of the time and date elements are changed. The values

$1,$2,$3, and$4correspond to the capture groups in the find expression. The capture groups are indicated with the pairs of parentheses( ).The static

.000Zis added in the replace expression to store the time and date value in the field. This effectively stamps the time and date in Coordinated Universal Time (UTC) time zone. If your log entry instead was in Pacific Standard Time (PST) time zone, then its time zone is artificially changed to UTC, but without the actual shift in the hour.Note

Currently, it is not possible to shift the time and date value into a different time zone at ingest time. But you can do this from the Log Explorer by using the eval command:-

After you have stored the event start and event end time, subtract the event end time from the event start time to get the event duration.

-

Add or subtract the duration of time difference between the time zones calculated in milliseconds.

-

Convert the output from number of milliseconds to the time and date format.

-

-

In the Extended Fields tab, add the extended field definitions to the log source to extract the time and date fields:

-

From Message field:

\*\*Starts\*\*{Event Start Time:\S+} -

From Message field:

\*\*Ends\*\*{Event Start Time:\S+}

-

-

Save the new log source that you created.

You can now notice that the two fields Event Start Time and Event End Time are populated with the date and time values from the log. In the Log Explorer, you can see the times as milliseconds since epoch.