Set Up Identity and Access Management Policies

Data Flow requires common policies to be set up in Identity and Access Management (IAM) to manage and run Spark applications.

- dataflow-service-level-policy

- dataflow-admins-policy

- dataflow-data-engineers-policy

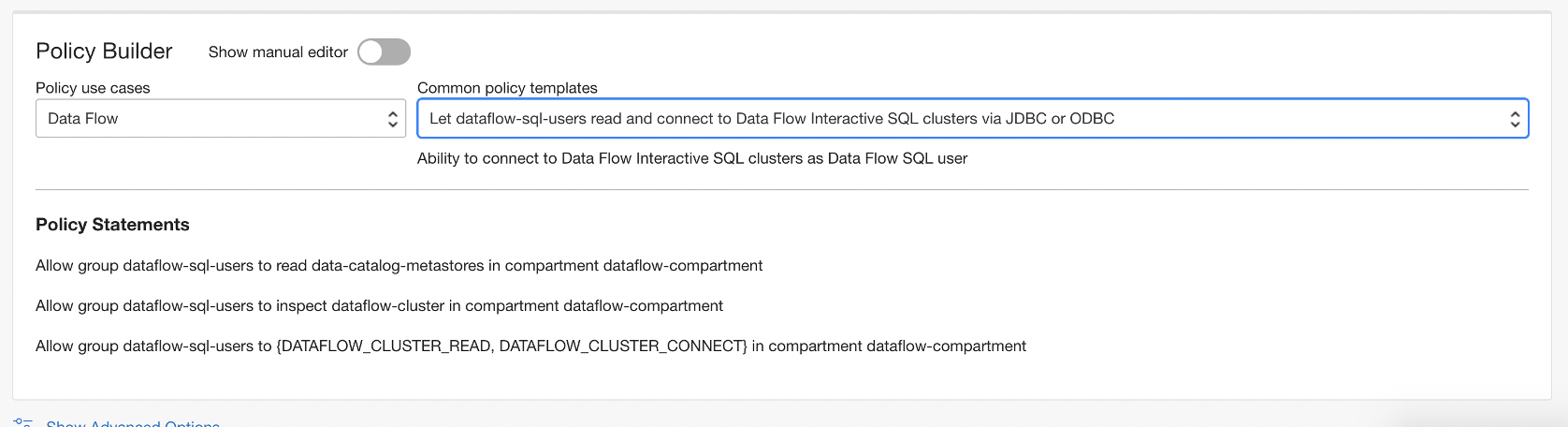

- dataflow-sql-users-policy

Data Flow Policy Templates

Data Flow has four Common Policy Templates. They are listed in the order in which you need to create the policies.

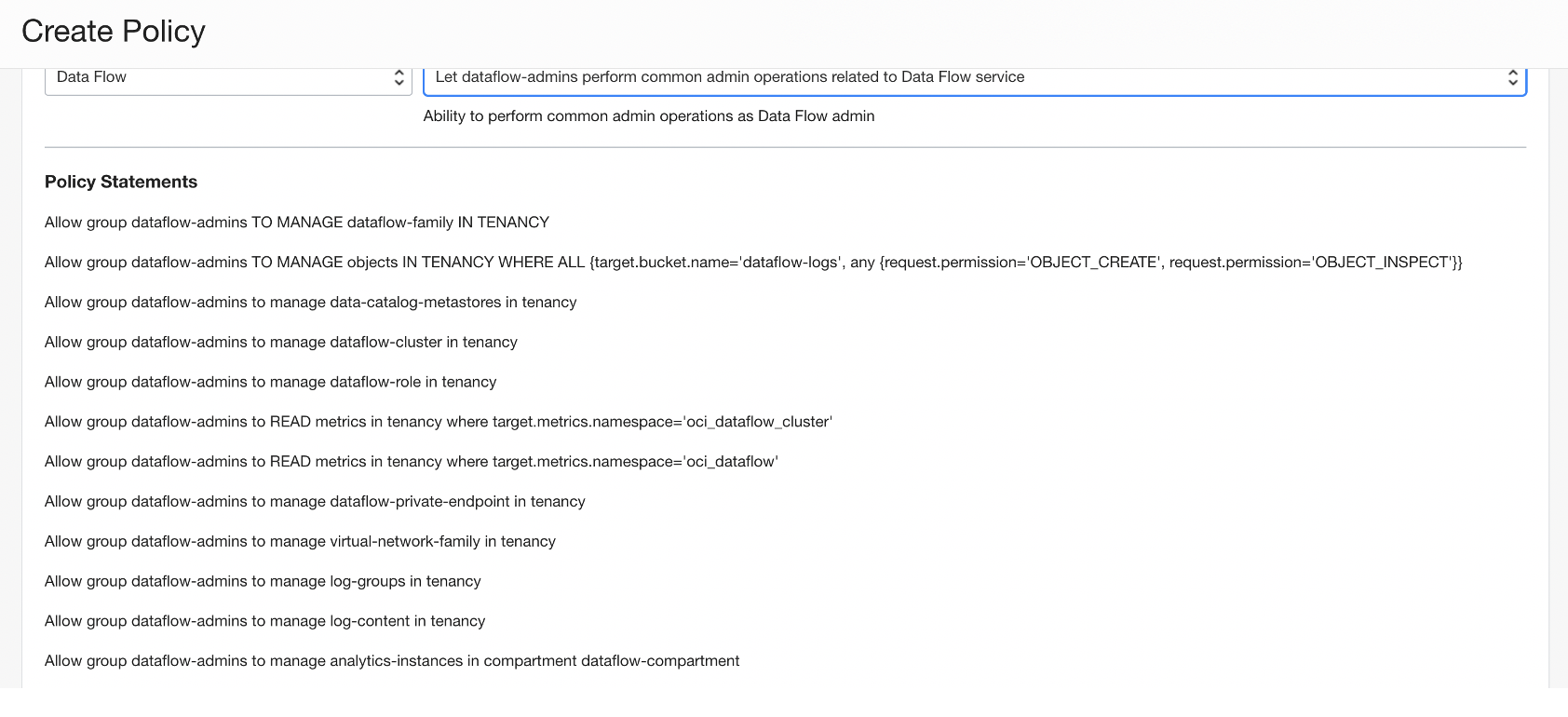

- Let Data Flow admins manage all Applications and Runs

- This template is for administrator-like users (or super users) of the service, who can take any action on the service, including managing applications owned by other users and runs started by any user within their tenancy, subject to the policies assigned to the group.

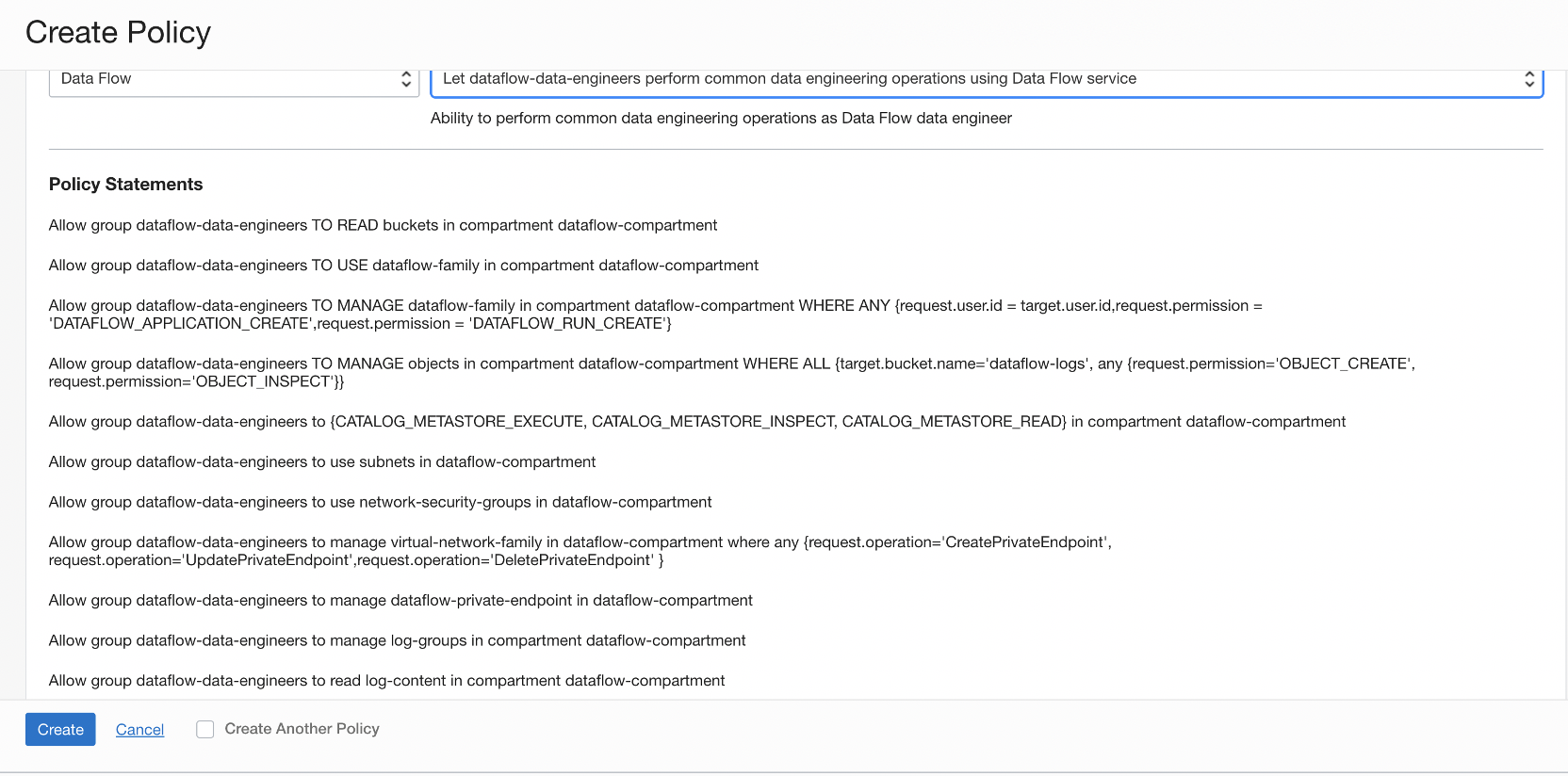

- Let Data Flow users manage their own Applications and Runs.

- This template is for all other users, who are only authorized to create and delete their own applications. They can run any application within their tenancy, but have no other administrative rights, such as deleting applications owned by other users or canceling runs started by other users.

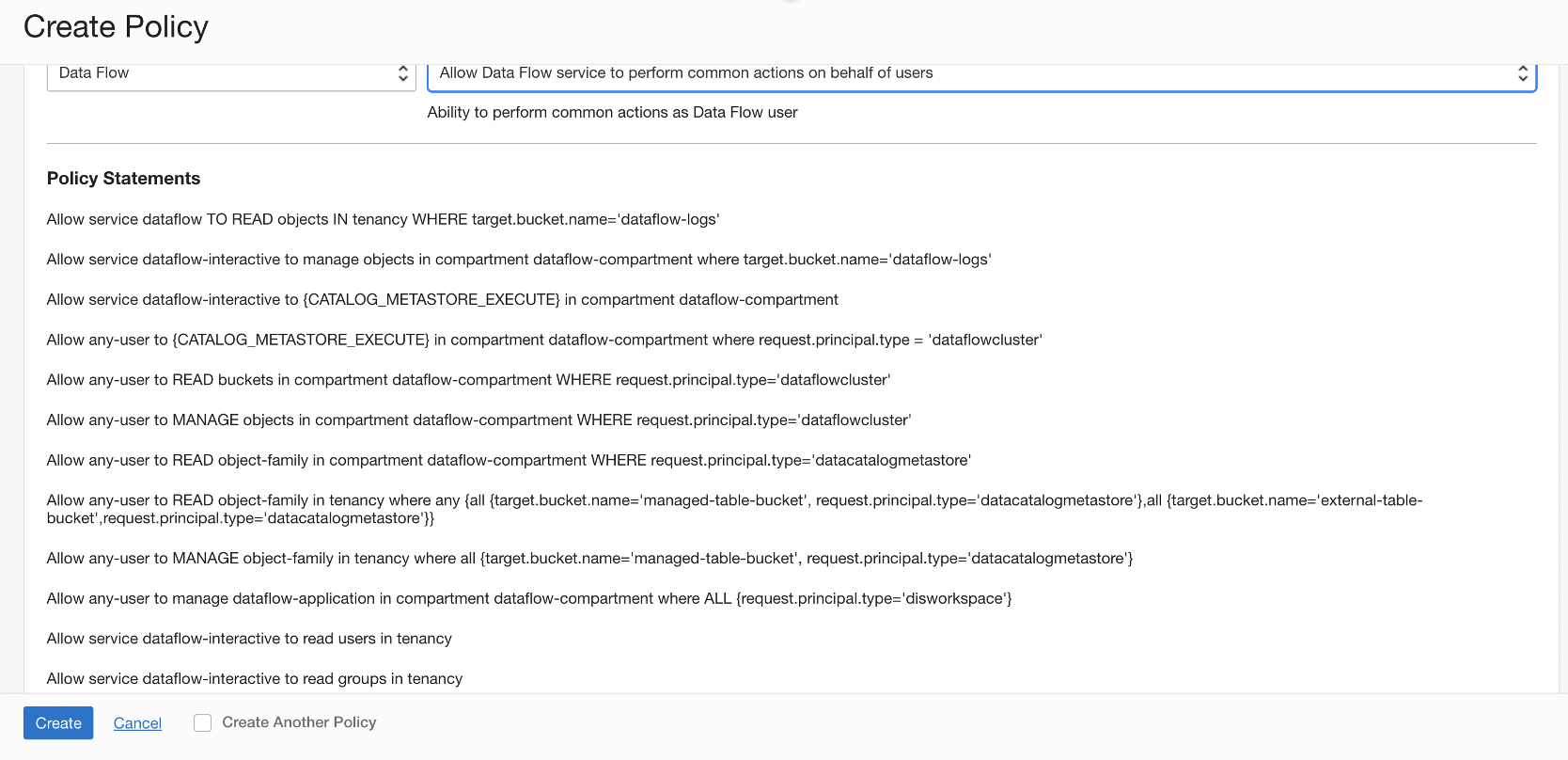

- Allow Data Flow service to perform actions on behalf of the user or group on objects within the tenancy.

- The Data Flow service needs permission to perform actions on behalf of the user or group on objects within the tenancy.

- (Optional) Allow Data Flow users to create, edit, or change private endpoints.

- This policy template allows use of the virtual-network-family, allows access to more specific resources, allows access to specific operations, and allows changing of the network configuration.

Creating Policies Using IAM Policy Builder Templates

Use the IAM Policy Builder templates to create your policies for Data Flow.

- From the navigation menu, select Identity & Security.

- Under Identity, select Policies.

Manually Create Policies

Rather than using the templates in IAM to create the policies for Data Flow, you can create them yourself in IAM Policy Builder.

Follow the steps in Managing Policies in IAM with Identity Domains or without Identity Domains to manually create the following policies:

- The first category is for administrator-like users (or super users) of the service, who can take any action on the service, including managing applications owned by other users and runs started by any user within their tenancy, subject to the policies assigned to the group:

  - Create a group in your identity service called dataflow-admin and add users to this group.

  - Create a policy called dataflow-admin and add the following statements:

        ALLOW GROUP dataflow-admin TO READ buckets IN <TENANCY>

        ALLOW GROUP dataflow-admin TO MANAGE dataflow-family IN <TENANCY>

        ALLOW GROUP dataflow-admin TO MANAGE objects IN <TENANCY> WHERE ALL {target.bucket.name='dataflow-logs', any {request.permission='OBJECT_CREATE', request.permission='OBJECT_INSPECT'}}

    The last statement grants access to objects in the dataflow-logs bucket.

- The second category is for all other users, who are only authorized to create and delete their own applications. They can run any application within their tenancy, but have no other administrative rights, such as deleting applications owned by other users or canceling runs begun by other users.

  - Create a group in your identity service called dataflow-users and add users to this group.

  - Create a policy called dataflow-users and add the following statements:

        ALLOW GROUP dataflow-users TO READ buckets IN <TENANCY>

        ALLOW GROUP dataflow-users TO USE dataflow-family IN <TENANCY>

        ALLOW GROUP dataflow-users TO MANAGE dataflow-family IN <TENANCY> WHERE ANY {request.user.id = target.user.id, request.permission = 'DATAFLOW_APPLICATION_CREATE', request.permission = 'DATAFLOW_RUN_CREATE'}

        ALLOW GROUP dataflow-users TO MANAGE objects IN <TENANCY> WHERE ALL {target.bucket.name='dataflow-logs', any {request.permission='OBJECT_CREATE', request.permission='OBJECT_INSPECT'}}
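The WHERE conditions in the dataflow-users statements can be read as boolean expressions. The Python sketch below is a simplified model of two of them, not the actual IAM policy engine. The `Request` class and both function names are hypothetical, and `DATAFLOW_APPLICATION_DELETE` and `OBJECT_DELETE` are assumed here only as examples of permissions that the conditions do not list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    """Hypothetical stand-in for the variables the policy conditions read."""
    user_id: str = ""         # request.user.id
    permission: str = ""      # request.permission
    target_user_id: str = ""  # target.user.id (owner of the resource)
    bucket_name: str = ""     # target.bucket.name


def may_manage_dataflow(req: Request) -> bool:
    # WHERE ANY {...}: a single matching clause is enough.
    return any([
        req.user_id == req.target_user_id,
        req.permission == "DATAFLOW_APPLICATION_CREATE",
        req.permission == "DATAFLOW_RUN_CREATE",
    ])


def may_manage_objects(req: Request) -> bool:
    # WHERE ALL {..., any {...}}: the bucket must match AND
    # at least one of the nested permissions must match.
    return all([
        req.bucket_name == "dataflow-logs",
        any([
            req.permission == "OBJECT_CREATE",
            req.permission == "OBJECT_INSPECT",
        ]),
    ])


# A user deleting their own application matches the ownership clause.
own_delete = Request(user_id="alice", target_user_id="alice",
                     permission="DATAFLOW_APPLICATION_DELETE")
# The same action on another user's application matches no clause.
other_delete = Request(user_id="alice", target_user_id="bob",
                       permission="DATAFLOW_APPLICATION_DELETE")
# Starting a run of another user's application matches a permission clause.
other_run = Request(user_id="alice", target_user_id="bob",
                    permission="DATAFLOW_RUN_CREATE")

print(may_manage_dataflow(own_delete))    # True
print(may_manage_dataflow(other_delete))  # False
print(may_manage_dataflow(other_run))     # True
```

The model covers only the conditional MANAGE statements. The unconditional READ buckets and USE dataflow-family statements grant their permissions separately and are not represented here.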

These policies allow you to use Oracle Cloud Infrastructure Logging with Data Flow.

Logs and log groups use the log-group resource-type, but to search the contents of logs, you must use the log-content resource-type. Add the following policies:

    allow group dataflow-users to manage log-groups in compartment <compartment_name>

    allow group dataflow-users to manage log-content in compartment <compartment_name>
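If the same Logging policies are needed for several groups or compartments, the statements can be generated rather than edited by hand. This is a minimal Python sketch: the function name `logging_statements` and the compartment name `analytics` are hypothetical, and the resulting text still has to be added to a policy in IAM.

```python
from typing import List


def logging_statements(group: str, compartment: str) -> List[str]:
    """Return the two Logging policy statements for one group and compartment."""
    if not group or not compartment:
        raise ValueError("group and compartment must be non-empty")
    # One statement per resource-type: log-groups, then log-content.
    return [
        f"allow group {group} to manage {resource} in compartment {compartment}"
        for resource in ("log-groups", "log-content")
    ]


# "analytics" is a placeholder compartment name used only for illustration.
for statement in logging_statements("dataflow-users", "analytics"):
    print(statement)
```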

Setting Up a Policy for Spark Streaming

To use Spark Streaming with Data Flow, you need more than the common policies.

You must have created the common policies either using the IAM Policy Builder templates or manually.

You can use the IAM Policy Builder to manage access to the sources and sinks your streaming applications consume from or produce to, for example a specific stream pool or a specific Object Storage bucket at a location you choose. Alternatively, follow these steps to create a policy manually: