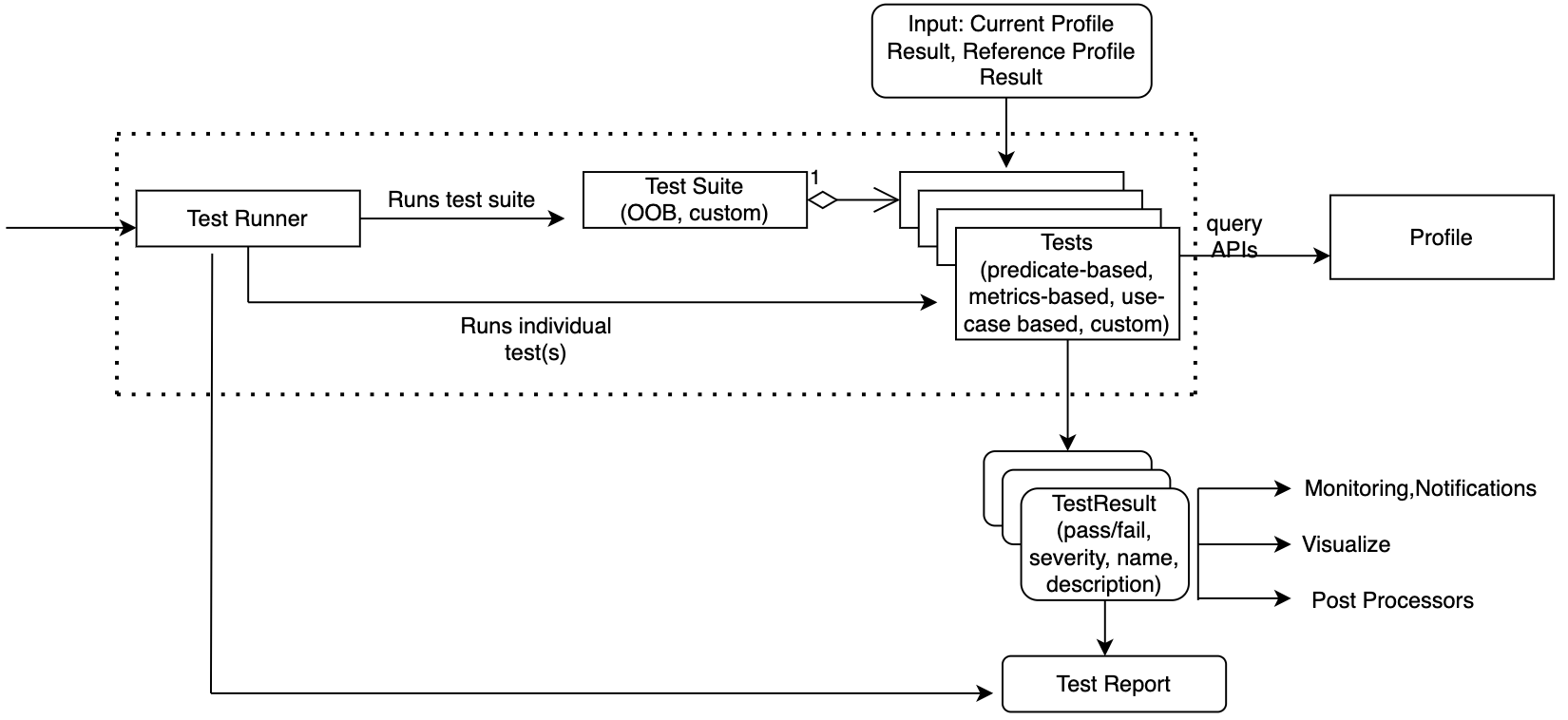

Test/Test Suites Component¶

So far, we have seen how Profile computation produces metric results. A machine learning engineer or data scientist typically also wants to apply tests (checks) to those metrics to find out whether any metric value breaches a threshold. For example, you might want to be alerted if the Minimum metric of a feature is beyond a certain threshold, if the Q1 quartile of a feature in a prediction run is not within a threshold of the corresponding value in the reference profile, or if the precision score of a classification model deviates by more than 10% from the precision score of the baseline run.

Insights Tests and Test Suites enable comprehensive validation of a customer's machine learning models and data through a set of tests and test suites covering use cases such as:

- Data Integrity
- Data Quality
- Model Performance (Classification, Regression)
- Drift
- Correlation, etc.

They provide a structured, simpler way to add thresholds on metrics. The results can drive notifications and alerts for continuous model monitoring, allowing users to take remedial action.

How it works¶

- The user has created Baseline/Prediction Profile(s).
- The user works with Test Suites, Tests, Test Conditions, Thresholds, Test Results and Test Reports.
- Insights Test Suites are composed of Insights Tests.

- Each Test has:
  - Test Condition (implicit or user-provided). Examples of user-provided conditions are >=, <=, etc. An implicit condition is used when running tests for a specific metric.
  - Threshold (either user-provided or captured from the reference profile). For example, the user can provide a value of 200 when evaluating the Mean of a feature with a greater-than test.
  - Test Configuration. Each test can take a test-specific config that tweaks its behavior. For example, when using TestGreaterThan, the user can decide whether to apply > or >= by setting the appropriate config.

- Tests are of various types, allowing flexibility and ease of use:
  - Custom Tests. Users can write tests specific to their needs if the library-provided tests do not meet their requirements.
- Tests can be added to, edited in, or removed from a Test Suite.
- Test Suites can be consumed as out-of-the-box Test Suites, created from scratch, or composed from an existing suite.

- Tests/Test Suites are executed, producing test evaluation results. Each test evaluation result consists of:
  - Test Name
  - Test Description
  - Test Status (Pass/Fail/Error)
  - Test Assertion (expected vs. actual)
  - System Tags
  - User-defined Tags
  - Test Configuration (if any)
  - Test Errors (if any)

- Test results can be stored in a customer-provided bucket.
- Further post processors can be added to push alerts to OCI Monitoring based on each test evaluation result.
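To make the list of result fields above concrete, here is a minimal, library-independent sketch of what a single test evaluation result might carry. The names `EvaluationResult` and `Status` are hypothetical and chosen only for illustration; they are not the Insights API, and the actual result classes may be shaped differently.

```python
import json
from dataclasses import asdict, dataclass, field
from enum import Enum
from typing import Any, Dict, Optional


class Status(str, Enum):
    """Hypothetical status values mirroring Pass/Fail/Error."""
    PASSED = "PASSED"
    FAILED = "FAILED"
    ERROR = "ERROR"


@dataclass
class EvaluationResult:
    """Illustrative container for one test evaluation result."""
    name: str
    description: str
    status: Status
    expected: Any = None          # assertion: expected value (threshold)
    actual: Any = None            # assertion: actual metric value
    system_tags: Dict[str, str] = field(default_factory=dict)
    user_tags: Dict[str, str] = field(default_factory=dict)
    config: Dict[str, Any] = field(default_factory=dict)
    error: Optional[str] = None


result = EvaluationResult(
    name="TestGreaterThan",
    description="The Mean of feature feature_1 is 250.0. Test condition : 250.0 > 200",
    status=Status.PASSED,
    expected=200,
    actual=250.0,
    system_tags={"feature": "feature_1"},
    user_tags={"key_1": "value_1"},
)

# A plain-JSON form like this could be written to a bucket or handed to a post processor.
serialized = json.dumps(asdict(result))
print(serialized)
```

Keeping the expected and actual values next to the status is what lets a downstream consumer explain why a test passed or failed without re-reading the profile.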

API¶

- The Test/Test Suites API can be used in four primary ways:
  - Insights Builder API
  - Insights Test Builder API
  - Insights Config API to get insights builder
  - Insights Config API to get test builder

Using Insights Builder API¶

- Insights Tests/Test Suites can be executed as part of an Insights run by using the following builder APIs:
  - with_test_config API to set up the tests
  - with_reference_profile API to set up the reference profile

Begin by loading the required libraries and modules:

from mlm_insights.builder.insights_builder import InsightsBuilder

Create the Insights builder using the required components, test config and reference profile (optional):

# Load the reference profile using either `ProfileReader` or a profile object.
# `TestConfig`, `test`, `input_schema`, `data_frame` and `reference_profile`
# are assumed to be imported or defined earlier.
test_config = TestConfig(tests=test)

run_result = (
    InsightsBuilder()
    .with_input_schema(input_schema)
    .with_data_frame(data_frame=data_frame)
    .with_test_config(test_config)
    .with_reference_profile(reference_profile=reference_profile)
    .build()
    .run()
)

The test results can be extracted from the run result object:

test_result = run_result.test_results

Using Insights Config API to get insights builder¶

Insights Test/Test Suites can be executed as part of an Insights run.

Begin by loading the required libraries and modules:

from mlm_insights.config_reader.insights_config_reader import InsightsConfigReader

Create the Insights builder using the config, test config and reference profile (optional):

# The chained calls are wrapped in parentheses so they can span multiple lines.
run_result = (
    InsightsConfigReader(config_location=test_config_path)
    .get_builder()
    .build()
    .run()
)

The test results can be extracted from the run result object:

test_result = run_result.test_results

Using Insights Config API to get test builder¶

In addition to the library-provided APIs, Insights Tests/Test Suites can be set up and customized by authoring and passing a JSON configuration. This section shows how to use the Insights configuration reader to load the config and run the tests.

Begin by loading the required libraries and modules:

from mlm_insights.config_reader.insights_config_reader import InsightsConfigReader

Create the Test Context by providing the current profile and the reference profile (optional):

from mlm_insights.tests.test_context.interfaces.test_context import TestContext
from mlm_insights.tests.test_context.profile_test_context import ProfileTestContext

# Load the current and reference profiles using either `ProfileReader` or custom implementations.

def get_test_context() -> TestContext:
    # Create a test context and pass the profiles loaded in the step above.
    return ProfileTestContext(current_profile=current_profile, reference_profile=reference_profile)

Initialize InsightsConfigReader by specifying the location of the monitor config JSON file that contains the test configuration:

from mlm_insights.config_reader.insights_config_reader import InsightsConfigReader

# The chained calls are wrapped in parentheses so they can span multiple lines.
test_results = (
    InsightsConfigReader(config_location=test_config_path)
    .get_test_builder(test_context=get_test_context())
    .build()
    .run()
)

Process the test_results to group them by test status or feature, or send them to Insights Post Processors for further use.

Let's see an example of grouping the test_results by test status:

from mlm_insights.tests.constants import GroupByKey

grouped_results = test_results.group_tests_by(group_by=GroupByKey.TEST_STATUS_KEY)

Insights Test Types¶

Before we take a deep dive into the test configuration schema, this section explains the Test Types. Currently, Insights supports the following Test Types:

- Predicate-based Tests
- Metric-based Tests

Predicate-based Tests¶

A general-purpose test that evaluates a single condition against a single metric for a feature.

- Each test provides a single predicate (test condition) of the form lhs <predicate> rhs.
  - For example, consider a test that evaluates whether the Mean of a feature is greater than 100.23. In this case, lhs is the value of the Mean metric, rhs is 100.23, and <predicate> is greater than (>).
  - For example, TestGreaterThan is a predicate-based test which tests whether a metric value is greater than a specific threshold.
- For a list of all predicate-based tests and their examples, refer to the section Predicate-based Tests.
- Allows fetching the compared value (rhs) from a dynamic source such as a reference profile.
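The `lhs <predicate> rhs` form can be sketched in plain Python as follows. This is an illustration under stated assumptions, not the library's implementation: profiles are modelled as simple dictionaries of metric values, and `PREDICATES` and `evaluate_predicate` are hypothetical names. The sketch shows the two sources for rhs described above: a user-provided threshold, or the same metric read from a reference profile.

```python
import operator
from typing import Callable, Dict, Optional

# Hypothetical mapping from a predicate name to a comparison function.
PREDICATES: Dict[str, Callable[[float, float], bool]] = {
    "greater_than": operator.gt,
    "greater_than_or_equal": operator.ge,
    "less_than": operator.lt,
    "equal": operator.eq,
}


def evaluate_predicate(
    predicate: str,
    metric_key: str,
    current_profile: Dict[str, float],
    threshold_value: Optional[float] = None,
    reference_profile: Optional[Dict[str, float]] = None,
) -> bool:
    """Evaluate `lhs <predicate> rhs` for one metric.

    lhs is the metric value in the current profile. rhs is the user-provided
    threshold if given, otherwise the same metric in the reference profile.
    """
    lhs = current_profile[metric_key]
    if threshold_value is not None:
        rhs = threshold_value
    elif reference_profile is not None:
        rhs = reference_profile[metric_key]
    else:
        raise ValueError("Either threshold_value or reference_profile is required")
    return PREDICATES[predicate](lhs, rhs)


current = {"Mean": 120.5}
reference = {"Mean": 100.23}

# Static threshold: is Mean > 100.23?
print(evaluate_predicate("greater_than", "Mean", current, threshold_value=100.23))
# Dynamic threshold: is Mean > Mean of the reference profile?
print(evaluate_predicate("greater_than", "Mean", current, reference_profile=reference))
```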

Metric-based Tests¶

- Tests specific to an Insights metric.
- Has a built-in metric key and test condition.
- For example, TestIsPositive is a metric-based test which works on the IsPositive metric only and tests whether a feature has all positive values.
- For a list of all metric-based tests and their examples, refer to the section Metric-based Tests.
- When no threshold values are provided, fetches the built-in metric values from the reference profile.

Note

The metric associated with any metric-based or predicate-based test configured by the user must be present in the Profile. For example, the Count metric must be present in the profile if the user wishes to run the TestIsComplete test.

If the metric associated with a particular metric-based or predicate-based test is not found during test execution, the test's status is set to ERROR and error details are added to the test result.
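The behaviour described in this note, where a missing metric produces an ERROR rather than a FAILED status, can be sketched as below. This is a simplified illustration: the profile is modelled as a nested dictionary, and `run_is_positive_check` is a hypothetical helper, not part of the Insights library.

```python
from typing import Dict, Tuple


def run_is_positive_check(profile: Dict[str, Dict[str, bool]], feature: str) -> Tuple[str, str]:
    """Return (status, detail) for a hypothetical IsPositive check on one feature."""
    metrics = profile.get(feature, {})
    if "IsPositive" not in metrics:
        # The metric was never computed, so the test cannot be evaluated at all.
        return "ERROR", f"Metric 'IsPositive' not found for feature '{feature}'"
    if metrics["IsPositive"]:
        return "PASSED", ""
    return "FAILED", f"Feature '{feature}' contains non-positive values"


profile = {
    "age": {"IsPositive": True},
    "balance": {"IsPositive": False},
    "income": {},  # IsPositive was not part of the profile for this feature
}

statuses = {name: run_is_positive_check(profile, name)[0] for name in profile}
print(statuses)
```

Separating ERROR from FAILED keeps a configuration problem (a metric missing from the profile) from being mistaken for a genuine data issue.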

Understanding Test Configuration¶

Insights Tests can be provided declaratively in JSON format. All tests must be defined under the test_config key in the Insights Configuration.

{
  "input_schema": {...},
  // other components go here
  "test_config": {<all tests are defined under this>}
}

We will now look at the details of the test_config key in the sections below.

Defining Feature Tests¶

All Insights Tests for a specific feature must be defined under the feature_metric_tests key. The general structure is as below:

{
  "test_config": {
    "feature_metric_tests": [
      {
        "feature_name": "Feature_1",
        "tests": [
          {
            // Each test is defined here
          }
        ]
      },
      {
        "feature_name": "Feature_2",
        "tests": [
          {
            // Each test is defined here
          }
        ]
      }
    ]
  }
}

Note

The feature name provided in the feature_name key must be present in the Profile, i.e., it should come from the features defined either in input_schema or via conditional features.

If the feature provided in feature_name is not found during test execution, the test's status is set to ERROR and error details are added to the test result.

Defining Dataset Tests¶

All Insights Tests for the entire dataset must be defined under the dataset_metric_tests key.

Dataset metric tests are evaluated against Dataset metrics.

The general structure is as below:

{
  "test_config": {
    "dataset_metric_tests": [
      {
        // Each test is defined here
      },
      {
        // Each test is defined here
      }
    ]
  }
}

Defining Predicate-based Tests¶

A predicate-based test is defined in feature_metric_tests under the tests key, and in dataset_metric_tests.

The general structure is as shown below:

{
  "test_name": "",
  "metric_key": "",
  "threshold_value": "<>",
  "threshold_source": "REFERENCE",
  "threshold_metric": "",
  "tags": {
    "key_1": "value_1"
  },
  "config": {}
}

The details of each of the above properties are described below:

| Key | Required | Description | Examples |
|---|---|---|---|
| test_name | Yes | | TestGreaterThan |
| metric_key | Yes | `{ metric_name: 'Quartiles', variable_names: ['q1', 'q2', 'q3'] }` (other details omitted for brevity) | Min, Quartiles.q1 |
| threshold_value | Yes, if threshold_metric is not provided. Otherwise No | | 100.0, [200, 400] |
| threshold_source | No | | Always set to REFERENCE |
| threshold_metric | Yes, if threshold_value is not provided. Otherwise No | | Min, Quartiles.q1 |
| tags | No | | `"tags": { "key_1": "value_1" }` One can provide multiple tags in the above format. |
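As an illustration of the Required column above, the following sketch builds a small configuration as a Python dictionary and checks each predicate-based test against those rules. The helper `validate_predicate_test` is hypothetical and encodes only what the table states; the library's own validation may differ, for example for tests that read their threshold from the reference profile.

```python
from typing import Any, Dict, List


def validate_predicate_test(test: Dict[str, Any]) -> List[str]:
    """Return a list of problems found in one predicate-based test definition."""
    problems = []
    for key in ("test_name", "metric_key"):
        if not test.get(key):
            problems.append(f"'{key}' is required")
    # Per the table: one of threshold_value / threshold_metric must be present.
    if "threshold_value" not in test and "threshold_metric" not in test:
        problems.append("either 'threshold_value' or 'threshold_metric' is required")
    return problems


config = {
    "test_config": {
        "feature_metric_tests": [
            {
                "feature_name": "Feature_1",
                "tests": [
                    {"test_name": "TestGreaterThan", "metric_key": "Min", "threshold_value": 100.0},
                    {"test_name": "TestLessThan", "metric_key": "Min", "threshold_metric": "Quartiles.q1"},
                    {"test_name": "TestEqual", "metric_key": "Min"},  # no threshold given
                ],
            }
        ]
    }
}

for feature in config["test_config"]["feature_metric_tests"]:
    for test in feature["tests"]:
        print(feature["feature_name"], test["test_name"], validate_predicate_test(test))
```

In this sketch the first two tests produce no problems, while the third is reported because it supplies neither a threshold value nor a threshold metric.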

Defining Metric-based Tests¶

A metric-based test is defined in feature_metric_tests under the tests key.

The general structure is as shown below:

{
  "test_name": "",
  "threshold_value": "<>",
  "tags": {
    "key_1": "value_1"
  }
}

The details of each of the above properties are described below:

| Key | Required | Description | Examples |
|---|---|---|---|
| test_name | Yes | | TestNoNewCategory |
| threshold_value | No | | 100.0, [200, 400] |
| tags | No | | `"tags": { "key_1": "value_1" }` One can provide multiple tags in the above format. |

List of Available Tests¶

Predicate-based Tests¶

| Test Name | Test Description | Test Configuration | Examples |
|---|---|---|---|
| TestGreaterThan | | | Tests whether RowCount metric > RowCount of reference profile |
| TestLessThan | | | (1) Tests whether Min metric of a feature < Median metric of the same feature. (2) Tests whether Min metric of a feature < p25, i.e. Q1, metric of the same feature. (3) Tests whether Min metric of a feature < p25, i.e. Q1, metric of reference profile |
| TestEqual | | None | Tests whether Min metric of a feature = 7500: `{ "test_name": "TestEqual", "metric_key": "Min", "threshold_value": 7500 }` |
| TestIsBetween | | | Tests whether Min metric of a feature lies within the range 7500 to 8000 |
| TestDeviation | | | `{ "test_name": "TestDeviation", "metric_key": "Mean", "config": { "deviation_threshold": 0.10 } }` |
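The range and deviation checks in the table can be read as the following plain-Python sketch. Two points are assumptions rather than documented behaviour: that the range check is inclusive at both ends, and that `deviation_threshold` is a relative deviation from the reference value (so 0.10 means 10%). The function names are hypothetical.

```python
def is_between(value: float, lower: float, upper: float) -> bool:
    """Range check; treating both bounds as inclusive is an assumption."""
    return lower <= value <= upper


def within_deviation(current: float, reference: float, deviation_threshold: float) -> bool:
    """True if `current` deviates from `reference` by at most `deviation_threshold`.

    The deviation is computed relative to the reference value (an assumption).
    """
    if reference == 0:
        # Relative deviation is undefined for a zero reference; only an exact match passes.
        return current == 0
    return abs(current - reference) / abs(reference) <= deviation_threshold


print(is_between(7600, 7500, 8000))          # True
print(within_deviation(105.0, 100.0, 0.10))  # True: 5% deviation
print(within_deviation(115.0, 100.0, 0.10))  # False: 15% deviation
```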

Metric-based Tests¶

| Test Name | Test Description | Test Configuration | Metric | Examples |
|---|---|---|---|---|
| TestIsComplete | | None | Count | (1) Tests whether completion percentage of a feature >= 95%, i.e. 95% of values are non-NaN. (2) Tests whether completion % of a feature >= completion % of the feature in reference profile |
| TestIsMatchingInferenceType | | None | TypeMetric | (1) Tests whether type of a feature is Integer. (2) Tests whether type of a feature matches the type in reference profile |
| TestIsNegative | | None | IsNegative | `{ "test_name": "TestIsNegative" }` |
| TestIsPositive | | None | IsPositive | `{ "test_name": "TestIsPositive" }` |
| TestIsNonZero | | None | IsNonZero | `{ "test_name": "TestIsNonZero" }` |
| TestNoNewCategory | | None | TopKFrequentElements | (1) Tests whether categories in a feature match the threshold_value list values. (2) Tests whether categories in a feature match the values present in reference profile |
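Two of the checks above can be sketched on raw values to show what they measure. This is only an illustration: the library evaluates these tests on profile metrics (Count, TopKFrequentElements), not on raw data, and the helpers `completion_ratio` and `new_categories` are hypothetical. The sketch also assumes that "no new category" means every observed category appears in the allowed list.

```python
import math
from typing import Iterable, List, Optional


def completion_ratio(values: List[Optional[float]]) -> float:
    """Fraction of values that are neither None nor NaN."""
    if not values:
        return 0.0
    present = sum(1 for v in values if v is not None and not math.isnan(v))
    return present / len(values)


def new_categories(current: Iterable[str], allowed: Iterable[str]) -> List[str]:
    """Categories seen in the current data that are absent from the allowed list."""
    return sorted(set(current) - set(allowed))


values = [1.0, 2.0, float("nan"), 4.0, None]
print(completion_ratio(values) >= 0.95)  # False: only 3 of 5 values are present

print(new_categories(["red", "green", "teal"], ["red", "green", "blue"]))  # ['teal']
```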

Test Results¶

This section describes the test results returned after test execution. The code is shown below:

from mlm_insights.config_reader.insights_config_reader import InsightsConfigReader

# The chained calls are wrapped in parentheses so they can span multiple lines.
test_results = (
    InsightsConfigReader(config_location=test_config_path)
    .get_test_builder(test_context=get_test_context())
    .build()
    .run()
)

- test_results is an instance of type TestResults, which exposes two high-level properties:
  - Test Summary
  - List of test results, one for each configured test

Test Summary¶

- Test Summary returns the following information about the executed tests:
  - Count of tests executed
  - Count of passed tests
  - Count of failed tests
  - Count of error tests. Tests error out when the test validation fails or an error is encountered during test execution.
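The four counts above amount to a tally over the per-test statuses. A minimal sketch, assuming the statuses are available as plain strings (the `summarize` helper and the dictionary keys are hypothetical, not the library's property names):

```python
from collections import Counter
from typing import Dict, List


def summarize(statuses: List[str]) -> Dict[str, int]:
    """Tally executed, passed, failed and error tests from a list of statuses."""
    counts = Counter(statuses)
    return {
        "tests_executed": len(statuses),
        "passed_tests": counts["PASSED"],
        "failed_tests": counts["FAILED"],
        "error_tests": counts["ERROR"],
    }


summary = summarize(["PASSED", "FAILED", "PASSED", "ERROR"])
print(summary)
```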

Test Result¶

Each test returns a result in a standard format, which includes the following properties:

| Key | Description | Example |
|---|---|---|
| name | Name of the test | TestGreaterThan, TestIsPositive |
| description | Test description in a structured format. For predicate-based tests, the description takes one of the following formats, depending on the test configuration: (1) `The <metric> of feature <feature> is <metric value>. Test condition : <lhs> [predicate condition] <rhs>` (2) `The <metric 1> of feature <feature> is <metric value>. <metric 2> of feature <feature> is <metric value>. Test condition : <lhs> [predicate condition] <rhs>` (3) `The <metric 1> of feature <feature> is <metric value>. <metric 1> of feature <feature> is <metric value> in Reference profile. Test condition is <lhs> [predicate condition] <rhs>` (4) `The <metric 1> is <metric value>. <metric 1> is <metric value> in Reference profile. Test condition is <lhs> [predicate condition] <rhs>` | (1) `The Min of feature feature_1 is 23.45. Test condition : 23.45 >= 4.5` (2) `The Min of feature feature_1 is 23.45. Median of feature feature_1 is 34.5. Test condition : 23.45 >= 34.5` (3) `The Min of feature feature_1 is 23.45. Min of feature feature_1 is 4.5 in Reference profile. Test condition is 23.45 >= 4.5` (4) `The RMSE is 23.45. RMSE is 12.34 in Reference profile. Test condition is 23.45 >= 12.34` (5) `The Min of feature feature_1 is 23.45. Test Condition: 23.45 deviates by +/- 4% from 1.2` |
| status | Each test, when executed, produces one of the following statuses: PASSED, FAILED, ERROR. When the test passes the given condition, the status is set to PASSED. When the test fails the given condition, the status is set to FAILED. When test execution encounters an error, the status is set to ERROR. | |
| Test Assertion Info | Each test returns the expected and actual information, which helps in understanding why a particular test passed or failed | |
| error | When a test encounters error(s) during its execution, returns an error description | |

Test Results Grouping¶

Test Results can be grouped by supported keys. This helps in arranging the tests for easier visualization, processing and sending to downstream components. Currently supported group-by keys are:

- Feature
- Test Status
- Test Type

Below are some code snippets along with the grouped results:

from mlm_insights.tests.constants import GroupByKey

# Group by features
grouped_results_by_features = test_results.group_tests_by(group_by=GroupByKey.FEATURE_TAG_KEY)
# Produces the grouped results as: {'feature_1': [List of Test Result], 'feature_2': [List of Test Result]}

# Group by test status
grouped_results_by_status = test_results.group_tests_by(group_by=GroupByKey.TEST_STATUS_KEY)
# Produces the grouped results as: {'PASSED': [List of Test Result], 'FAILED': [List of Test Result], 'ERROR': [List of Test Result]}
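Conceptually, grouping partitions the list of test results by one attribute. The sketch below reproduces that shape with plain dictionaries so the structure of the grouped output is easy to see; `group_by` is a hypothetical helper and the dictionary-based results are a simplification, not the library's `TestResults` type.

```python
from collections import defaultdict
from typing import Dict, List

Result = Dict[str, str]


def group_by(results: List[Result], key: str) -> Dict[str, List[Result]]:
    """Partition results into lists keyed by the value of `key`."""
    grouped: Dict[str, List[Result]] = defaultdict(list)
    for result in results:
        grouped[result[key]].append(result)
    return dict(grouped)


results = [
    {"name": "TestGreaterThan", "feature": "feature_1", "status": "PASSED"},
    {"name": "TestIsPositive", "feature": "feature_1", "status": "FAILED"},
    {"name": "TestIsComplete", "feature": "feature_2", "status": "PASSED"},
]

by_feature = group_by(results, "feature")
by_status = group_by(results, "status")

print(sorted(by_feature))  # ['feature_1', 'feature_2']
print({status: len(items) for status, items in by_status.items()})
```

A status with no matching tests simply does not appear as a key in this sketch, so downstream code should not assume that every status is present.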